Lab 2 - Simple Chemotaxis

This lab is designed to familiarize you with the basics of chemotaxis, the kind of gradient-following performed by bacteria such as E. coli.

We will compare our random agent from Lab 1 (Rando) with a gradient searcher that behaves like a simple bacterium. We’ll call this agent Chemo.

There are three goals here.

Build intuition about scent signals and gradients.

Examine how agents search an open field for targets that emit a noisy, variable scent.

Compare simple chemotaxis, with a Levy-walk structure, to Brownian motion.

Background

In this lab we return to taxic exploration. We revisit the sniff world (aka ScentGrid) and look at what happens when sensory signals are noisy.

Structured randomness and basic chemotaxis

In Lab 1 we played with a random search agent that wandered using Brownian motion. Here we will introduce a new random agent that moves according to Levy walks.

A Levy walk is a continuing process of random movement where, at each “step”, a direction and a distance of movement are chosen at random. The distance \(\delta_i\) of movement at time step \(i\) is sampled as follows: \(\delta_i = {u_i}^{-\frac{1}{\gamma}}\), where \(u_i \sim U(0,1)\) and \(\gamma > 1\).
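A minimal NumPy sketch of the Levy-walk sampling rule, assuming \(u_i\) is drawn uniformly from (0, 1] so that it is always positive, \(\delta_i\) is real, and every step has length at least 1. The heading of each step is drawn uniformly from [0, 2π). The function names `levy_step_lengths` and `levy_walk` are hypothetical and not part of explorationlib.

```python
import numpy as np


def levy_step_lengths(n_steps, gamma=2.0, rng=None):
    """Sample step lengths delta_i = u_i ** (-1 / gamma).

    Assumption: u_i ~ Uniform(0, 1], so every delta_i >= 1 and the lengths
    have a power-law tail P(delta > x) = x ** (-gamma) for x >= 1.
    """
    if gamma <= 1:
        raise ValueError("gamma must be greater than 1")
    rng = np.random.default_rng(rng)
    # rng.random() samples [0, 1); 1 - that is (0, 1], which avoids 0 ** (-1/gamma)
    u = 1.0 - rng.random(n_steps)
    return u ** (-1.0 / gamma)


def levy_walk(n_steps, gamma=2.0, rng=None):
    """Return an (n_steps + 1, 2) array of 2D positions starting at the origin."""
    rng = np.random.default_rng(rng)
    lengths = levy_step_lengths(n_steps, gamma, rng)
    # The direction of each step is uniform random
    angles = rng.uniform(0.0, 2.0 * np.pi, size=n_steps)
    steps = np.column_stack([lengths * np.cos(angles), lengths * np.sin(angles)])
    return np.vstack([np.zeros((1, 2)), np.cumsum(steps, axis=0)])


positions = levy_walk(500, gamma=2.0, rng=0)
```

Smaller values of gamma make long jumps more frequent; as gamma grows, the lengths concentrate near 1 and the walk looks more like ordinary diffusion.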

Our chemotaxis agent (GradientDiffusionGrid) tries to directly estimate the gradient of the scent \(\nabla S\) by comparing the scent level at the last grid position it occupied to the current scent level (\(s\)). By “last position” we mean the position the agent occupied before its most recent move.

Our chemotaxis agent thus behaves as follows:

When the gradient is positive, meaning the agent is going “up” the gradient, the probability of changing course and doing a tumble is set to p_pos.

When the gradient is negative, the probability of doing a tumble is set to p_neg (see the agent setup code in Section 2 for an example).

If the agent “decides” to tumble, the new direction it takes is chosen uniformly at random.

If no tumble is decided, the agent keeps moving in the direction it was going before.

The length of each move is now fixed at a single grid step. This makes our decision problem a lot simpler: it is equivalent to holding the diffusion parameter, \(D\), constant across experiments.
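The run-and-tumble rule described above can be sketched in a few lines. This is a hypothetical illustration, not the explorationlib GradientDiffusionGrid implementation: the helpers `chemo_step` and `run_chemo` and the four-neighbour move set `MOVES` are assumptions. The gradient is estimated as the difference between the current scent and the scent at the previous position, and a gradient that is not positive uses p_neg.

```python
import numpy as np

# Assumed move set: the four grid neighbours (explorationlib may use a different one)
MOVES = np.array([[1, 0], [-1, 0], [0, 1], [0, -1]])


def chemo_step(position, heading, last_scent, scent, p_pos=0.2, p_neg=0.8, rng=None):
    """One hypothetical run-and-tumble step: maybe tumble, then move one grid cell."""
    rng = np.random.default_rng(rng)
    # Finite-difference estimate of the scent gradient along the path
    grad = scent - last_scent
    # Going "up" the gradient uses p_pos; flat or "down" uses p_neg
    p_tumble = p_pos if grad > 0 else p_neg
    if rng.random() < p_tumble:
        # Tumble: choose a new direction uniformly at random
        heading = MOVES[rng.integers(len(MOVES))]
    # The step length is always a single grid cell
    new_position = np.asarray(position) + heading
    return new_position, heading


def run_chemo(scent_fn, start, num_steps, p_pos=0.2, p_neg=0.8, seed=0):
    """Simulate a hypothetical chemotaxis agent on a scent function scent_fn(position)."""
    rng = np.random.default_rng(seed)
    position = np.asarray(start)
    heading = MOVES[rng.integers(len(MOVES))]
    last_scent = scent_fn(position)
    path = [position]
    for _ in range(num_steps):
        scent = scent_fn(position)
        position, heading = chemo_step(position, heading, last_scent, scent, p_pos, p_neg, rng)
        last_scent = scent  # scent at the position we just left
        path.append(position)
    return np.array(path)


# Example: a scent that increases toward the origin
path = run_chemo(lambda x: -np.abs(x).sum(), start=(10, 10), num_steps=200)
```

Setting p_pos equal to p_neg removes any dependence on the scent, turning the same loop into a scent-blind random walk.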

Section 0 - Setup

First let’s set things up for the two parts of the lab. You’ve done this before, so we don’t need to specify each installation and module step.

!pip install --upgrade git+https://github.com/coaxlab/explorationlib

!pip install --upgrade git+https://github.com/MattChanTK/gym-maze.git

import shutil

import glob

import os

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

from copy import deepcopy

import explorationlib

from explorationlib.local_gym import ScentGrid

from explorationlib.agent import DiffusionGrid

from explorationlib.agent import GradientDiffusionGrid

from explorationlib.run import experiment

from explorationlib.util import select_exp

from explorationlib.util import load

from explorationlib.util import save

from explorationlib.local_gym import uniform_targets

from explorationlib.local_gym import constant_values

from explorationlib.local_gym import create_grid_scent

from explorationlib.local_gym import add_noise

from explorationlib.local_gym import create_grid_scent_patches

from explorationlib.plot import plot_position2d

from explorationlib.plot import plot_length_hist

from explorationlib.plot import plot_length

from explorationlib.plot import plot_targets2d

from explorationlib.plot import plot_scent_grid

from explorationlib.score import total_reward

from explorationlib.score import num_death

# Pretty plots

%matplotlib inline

%config InlineBackend.figure_format='retina'

%config IPCompleter.greedy=True

plt.rcParams["axes.facecolor"] = "white"

plt.rcParams["figure.facecolor"] = "white"

plt.rcParams["font.size"] = "16"

# Dev

%load_ext autoreload

%autoreload 2

Section 1 - Simulating noisy scents

To build some intuition, let’s plot the “scent” emitted by a single target.

Full Scent

Okay, let’s first visualize what the scent diffusing around a single target looks like, using the diffusion parameters set below (sigma=2).

target_boundary = (10, 10)

coord, scent = create_grid_scent_patches(

target_boundary, p=1.0, sigma=2)

plt.imshow(scent, interpolation=None)
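To build intuition for what a scent patch is, here is a hypothetical stand-in that models the scent around one target as an isotropic Gaussian bump of width sigma. This is an assumption for illustration only: the exact shape, grid extent, and the role of p in create_grid_scent_patches may differ, so check the library source. The name `gaussian_scent_patch` is hypothetical.

```python
import numpy as np


def gaussian_scent_patch(boundary=(10, 10), sigma=2.0, amplitude=1.0):
    """Hypothetical scent model: amplitude * exp(-r**2 / (2 * sigma**2)).

    The grid is assumed to span -boundary..boundary on each axis, with the
    target at the origin.
    """
    nx, ny = boundary
    xx, yy = np.meshgrid(np.arange(-nx, nx + 1), np.arange(-ny, ny + 1), indexing="ij")
    scent = amplitude * np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return (xx, yy), scent


coord_sketch, scent_sketch = gaussian_scent_patch((10, 10), sigma=2.0)
```

Passing `scent_sketch` to `plt.imshow` shows a single bright spot; one sigma (two cells) from the target, the scent has fallen to exp(-1/2), about 61% of its peak.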

Noisy Scent

To corrupt the signal, we add Gaussian noise using the add_noise function with \(\sigma=1\).

noise_sigma = 1.0

coord, scent = create_grid_scent_patches(target_boundary, p=1.0, sigma=2)

scent = add_noise(scent, noise_sigma)

plt.imshow(scent, interpolation=None)
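A minimal sketch of the noise model, assuming add_noise adds independent zero-mean Gaussian noise with standard deviation noise_sigma to every grid cell. The helpers `add_gaussian_noise` and `peak_snr` are hypothetical. With a peak scent of 1 and noise_sigma = 1, the noise at each cell is as large as the strongest signal, which is why a single noisy image is so hard to read.

```python
import numpy as np


def add_gaussian_noise(scent, noise_sigma, rng=None):
    """Assumed noise model: i.i.d. N(0, noise_sigma**2) added to each cell."""
    rng = np.random.default_rng(rng)
    return scent + rng.normal(0.0, noise_sigma, size=np.shape(scent))


def peak_snr(scent, noise_sigma):
    """Ratio of the peak noise-free scent to the per-cell noise standard deviation."""
    return np.max(scent) / noise_sigma
```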

Doesn’t look resolvable, does it? If you squint, maybe you can see it.

To confirm that there is signal there, let’s take a look at the average over 100 noisy samples of the same target.

amplitude = 1
noise_sigma = 1.0
num_samples = 100

scents = []
for _ in range(num_samples):
    coord, scent = create_grid_scent_patches(
        target_boundary, p=1.0, amplitude=amplitude, sigma=2)
    scent = add_noise(scent, noise_sigma)
    scents.append(deepcopy(scent))

# Average over the noisy samples
scent = np.mean(scents, axis=0)
plt.imshow(scent, interpolation=None)

So, pretty noisy but resolvable.
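Why does averaging work? Assuming the noise in each sample is independent with standard deviation \(\sigma\), the noise in the average of \(N\) samples has standard deviation \(\sigma/\sqrt{N}\), while the underlying scent is unchanged. With noise_sigma = 1 and 100 samples that is 0.1, so the peak stands about ten times above the noise. The quick simulation below checks the \(1/\sqrt{N}\) rule on pure Gaussian noise; `averaged_noise_std` is a hypothetical helper.

```python
import numpy as np


def averaged_noise_std(noise_sigma, num_samples, num_cells=5000, seed=0):
    """Empirical std of the mean of `num_samples` independent noise draws per cell."""
    rng = np.random.default_rng(seed)
    noise = rng.normal(0.0, noise_sigma, size=(num_samples, num_cells))
    return noise.mean(axis=0).std()


# Compare the simulated noise level with noise_sigma / sqrt(N)
for n in (1, 10, 100):
    print(n, round(averaged_noise_std(1.0, n), 3), round(1.0 / np.sqrt(n), 3))
```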

Question 1.1

Adding noise and lowering detection probability both act to increase distortion to the signal channel that will be used by our agents. Will this help or hinder the agents that use sensory signals and/or information to drive their decisions? Explain your answer.

Answer:

(insert response here)

Question 1.2

Re-run the simulations above, varying noise_sigma from 1 to 10. What values of noise_sigma lead to a complete loss of the scent signal in the single-sample plot, and how does that change what you can resolve when averaging across 1000 samples (set num_samples = 1000)?

Answer:

(insert response here)

Section 2 - Using Basic Sensory Evidence To Explore

In this section we take on the simplest form of sensory tracking: detecting whether a chemical gradient is increasing or decreasing.

We start by setting up our basic environment.

# Noise scents

noise_sigma = 2 # We'll make it pretty noisy

# Shared

num_experiments = 100

num_steps = 500

seed_value = 5838

# ! (leave alone)

min_length = 1

num_targets = 50

target_boundary = (40, 40)

# Targets

prng = np.random.RandomState(seed_value)

targets = uniform_targets(num_targets, target_boundary, prng=prng)

values = constant_values(targets, 1)

# Scents

scents = []
for _ in range(len(targets)):
    coord, scent = create_grid_scent_patches(
        target_boundary, p=1.0, amplitude=1, sigma=2)
    scents.append(scent)

# Env

env = ScentGrid(mode=None)

env.seed(seed_value)

env.add_scents(targets, values, coord, scents, noise_sigma=noise_sigma)

Again, we are working in a scent grid environment where each target emits noisy chemical signals (scents) according to our definitions above.

We want the environment to be relatively sparse, to make the task somewhat difficult. Thus we will only generate 50 targets (num_targets) in a big open field.

Here’s a plot of the targets in our environment:

plot_boundary = target_boundary

ax = None

ax = plot_targets2d(

env,

boundary=plot_boundary,

color="black",

alpha=1,

label="Targets",

ax=ax,

)

We will use 2 agents in these sims:

Rando: Uses random Brownian motion search.

Chemo: Uses only the detected scent gradient to make a decision as to whether or not to tumble.
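As a point of reference for Rando, here is a minimal sketch of an unbiased random walk on a grid, assuming unit steps to one of the four neighbours chosen uniformly at random (explorationlib’s DiffusionGrid may draw step lengths differently). Because the steps are independent and zero-mean, the mean squared displacement grows linearly with the number of steps; for unit steps it equals the step count. `grid_random_walks` and `MOVES` are hypothetical names.

```python
import numpy as np

# Assumed move set: unit steps to the four grid neighbours
MOVES = np.array([[1, 0], [-1, 0], [0, 1], [0, -1]])


def grid_random_walks(num_walkers, num_steps, seed=0):
    """Return final displacements, shape (num_walkers, 2), of unbiased grid walks."""
    rng = np.random.default_rng(seed)
    choices = rng.integers(len(MOVES), size=(num_walkers, num_steps))
    return MOVES[choices].sum(axis=1)


final = grid_random_walks(num_walkers=5000, num_steps=100)
# Mean squared displacement; close to num_steps for unit steps
msd = np.mean(np.sum(final**2, axis=1))
```

Chemo differs from this baseline only in that its tumble probability depends on the sensed scent gradient.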

How do these agents work? Check the explorationlib code for details.

In the left panel on Colab, click on the file icon to access the file view for your Colab notebook.

Click the “..” folder to go up one level (if necessary) and then navigate to

/usr/local/lib/python3.XX/dist-packages/explorationlib/agent.py and double-click the file to open the library’s code for defining exploration agents. Find the DiffusionGrid and GradientDiffusionGrid classes to see how they are implemented.
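If the file browser is awkward, you can also locate the installed source from Python. This sketch uses only the standard library (`importlib.util.find_spec`); the helper name `find_package_file` is hypothetical.

```python
import importlib.util
import os


def find_package_file(package_name, filename):
    """Return the path of `filename` inside an installed package, or None if not found."""
    spec = importlib.util.find_spec(package_name)
    if spec is None or not spec.submodule_search_locations:
        return None
    package_dir = list(spec.submodule_search_locations)[0]
    path = os.path.join(package_dir, filename)
    return path if os.path.exists(path) else None


print(find_package_file("explorationlib", "agent.py"))
```

Alternatively, after running the imports in Section 0, `import inspect` followed by `print(inspect.getsource(GradientDiffusionGrid))` prints the class source directly in the notebook.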

# Agents

# Random search agent

diff = DiffusionGrid(min_length=min_length)

diff.seed(seed_value)

# Chemotaxis agent

min_length = 1 # Minimum step length on the grid

p_neg = 0.80 # Probability of tumbling if gradient is decreasing

p_pos = 0.20 # Probability of tumbling if gradient is increasing

chemo = GradientDiffusionGrid(

min_length=min_length,

p_neg=p_neg,

p_pos=p_pos,

)

chemo.seed(seed_value)
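To see what p_pos and p_neg mean in practice: if the tumble probability stays at a fixed value p on every step, the number of steps up to and including the next tumble is geometrically distributed with mean 1/p. With the values above that is 1/0.2 = 5 steps per run while the scent keeps rising, versus 1/0.8 = 1.25 steps while it does not. This assumes the gradient sign stays the same throughout a run, which a noisy scent will not guarantee. The quick check below compares the formula with simulated runs; `expected_run_length` and `simulated_run_lengths` are hypothetical helpers.

```python
import numpy as np


def expected_run_length(p_tumble):
    """Mean number of steps up to and including the next tumble (geometric distribution)."""
    return 1.0 / p_tumble


def simulated_run_lengths(p_tumble, num_runs=100_000, seed=0):
    """Steps until the first tumble, with an independent tumble check on each step."""
    rng = np.random.default_rng(seed)
    return rng.geometric(p_tumble, size=num_runs)


for p in (0.2, 0.8):
    print(p, expected_run_length(p), simulated_run_lengths(p).mean())
```

Note that p = 0 gives an infinite expected run: the agent never tumbles for as long as the condition holds.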

Now let’s run the experiments.

# Experiments

rand_exp = experiment(

f"rand",

diff,

env,

num_steps=num_steps,

num_experiments=num_experiments,

dump=False,

split_state=True,

seed=seed_value

)

chemo_exp = experiment(

f"chemo",

chemo,

env,

num_steps=num_steps,

num_experiments=num_experiments,

dump=False,

split_state=True,

seed=seed_value

)

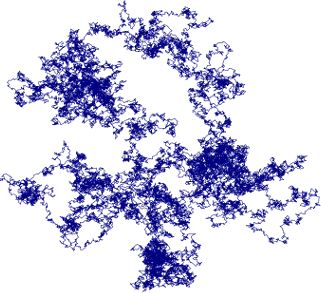

Let’s plot an example experiment. Here I’m choosing the second run for each agent.

plot_boundary = target_boundary

# -

num_experiment = 2

ax = None

ax = plot_position2d(

select_exp(rand_exp, num_experiment),

boundary=plot_boundary,

label="Rando",

color="red",

alpha=0.8,

ax=ax,

)

ax = plot_position2d(

select_exp(chemo_exp, num_experiment),

boundary=plot_boundary,

label="Chemo",

color="blue",

alpha=0.6,

ax=ax,

)

ax = plot_targets2d(

env,

boundary=plot_boundary,

color="black",

alpha=1,

label="Targets",

ax=ax,

)

It’s hard to distinguish their individual behaviors, but both agents seem to be exploring. The Chemo agent appears to move more than its Rando counterpart, so let’s look at the overall distance each agent covers.

# Results

results = [rand_exp, chemo_exp]

names = ["Rando", "Chemo"]

colors = ["red", "blue"]

# Score by distance
scores = []
for name, res, color in zip(names, results, colors):
    # Total distance covered in each experiment (one value per run)
    scores.append([np.sum(r["agent_num_step"]) for r in res])

# Tabulate
m, sd = [], []
for (name, s, c) in zip(names, scores, colors):
    m.append(np.mean(s))
    sd.append(np.std(s))

# Plot means

fig = plt.figure(figsize=(4, 3))

plt.bar(names, m, yerr=sd, color="black", alpha=0.6)

plt.ylabel("Total run distance")

plt.tight_layout()

sns.despine()

Question 2.1

Why is the Chemo agent covering more distance than the random diffusion agent?

Answer:

(insert response here)

Okay, now we can look at the overall performance of the two agents.

First, let’s look at the most extreme performance measure: deaths. If an agent does not reach at least one food pellet, it dies at the end of the simulation. You’ve got to eat to survive, after all. Out of our set of simulations, how many deaths occurred with each agent?
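The death criterion can be written as a one-line rule. A minimal sketch, assuming we have the total reward collected in each experiment; `count_deaths` and `death_rate` are hypothetical helpers for illustration, not the num_death function from explorationlib.score.

```python
import numpy as np


def count_deaths(rewards_per_experiment):
    """Count experiments in which the agent collected no reward (never ate)."""
    rewards = np.asarray(rewards_per_experiment, dtype=float)
    return int(np.sum(rewards <= 0))


def death_rate(rewards_per_experiment):
    """Fraction of experiments that ended in death."""
    return count_deaths(rewards_per_experiment) / len(rewards_per_experiment)
```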

# Results

results = [rand_exp, chemo_exp]

names = ["Rando", "Chemo"]

colors = ["red", "blue"]

# Score by number of deaths
scores = []
for name, res, color in zip(names, results, colors):
    scores.append(num_death(res))

# Tabulate
m, sd = [], []
for (name, s, c) in zip(names, scores, colors):
    m.append(np.mean(s))
    sd.append(np.std(s))

# Plot means

fig = plt.figure(figsize=(4, 3))

plt.bar(names, m, yerr=sd, color="black", alpha=0.6)

plt.ylabel("Deaths")

plt.tight_layout()

sns.despine()

# Results

results = [rand_exp, chemo_exp]

names = ["Rando", "Chemo"]

colors = ["red", "blue"]

# Score by total reward
scores = []
for name, res, color in zip(names, results, colors):
    r = total_reward(res)
    scores.append(r)

# Tabulate
m, sd = [], []
for (name, s, c) in zip(names, scores, colors):
    m.append(np.mean(s))
    sd.append(np.std(s))

# Plot means

fig = plt.figure(figsize=(5, 4))

plt.bar(names, m, yerr=sd, color="black", alpha=0.6)

plt.ylabel("Total reward")

plt.tight_layout()

sns.despine()

# Dists

fig = plt.figure(figsize=(5, 4))

for (name, s, c) in zip(names, scores, colors):
    plt.hist(s, label=name, color=c, alpha=0.5, bins=np.linspace(0, np.max(scores), 15))

plt.legend()

plt.xlabel("Score")

plt.tight_layout()

sns.despine()

Question 2.2

How do each of our agents perform across the performance measures we have chosen?

Answer:

(insert response here)

Question 2.3

What explains this difference in performance? Be specific.

Answer:

(insert response here)

Question 2.4

Right now we have set p_neg and p_pos to 80% and 20%, respectively. This means the agent stops its run and tumbles 80% of the time when the gradient is not increasing and 20% of the time when it is. Let’s see how performance changes when we make the algorithm almost deterministic. Write down the (approximate) performance numbers from above (total run distance, number of deaths, and total reward), then change p_neg to 1.00 and p_pos to 0.00. This means that whenever the gradient is increasing, our sniff valentino will keep running, and whenever it is not, it will always tumble.

What is the performance of our chemotaxic agent when you make the decision rule deterministic? Why do you think this change in performance occurred? Be specific.

Answer:

(insert response here)

IMPORTANT Did you collaborate with anyone on this assignment, or use LLMs like ChatGPT? If so, list their names here.

Write Name(s) here