Lab 1 - Random exploration#

This section has two goals.

To get you familiar with the python library we will be using for most of the semester to simulate and explore exploration.

Explore the utility of random exploration as an exploration strategy.

Google Colab is a simple free way to run python code. See the python chapter for more on it. Most of the chapters and assignments in this book have a button to open in Colab (see the rocket button at the top).

Background#

The explorationlib library has three primary parts for running simulations. Here we will outline the three and give a brief explanation for how they work.

Agents#

Agents are functions that have a specific set of 1) inputs, 2) computations, and 3) outputs. The input-outut form is defined by the environment (see below). For example, in the bacterial foraging environments the inputs are scent signals reflecting “food” and the ouputs are direction of movement along a 2-dimensional grid. For the bandit environments, the inputs are feedback signals (e.g., rewards) and the outputs are \(n\) actions, where \(n\) is the number of possible choice options. Agents have an internal state object that is updated according to various algorithms that we will play with in class.

Environments#

Environments define the contingencies that agents act on. Here we use the gym library for most of our simulations. There are two classes of environments we will work with: foraging grids and bandits. These will be explained in detail later.

Experiment#

The experiment function takes an agent, an environment, and a set of relevant parameters and executes the simulations.

Section 0 - Setup#

Use the button to open this assignment in a colab. Once it is open, if it is open, run all the cells. Read each cell, then run it, that is. This simple test of the colab is also a good but basic introduction to explorationlib, which is the basis for all the experiments and assignments you will see.

If there are no errors, celebrate and consider this assignment complete.

Note: I assume that you, reader, are familiar with python programming already. If you are not, see the Introduction to python assignment.

Install explorationlib#

Colab comes with many of the libraries we will need. It does not come with explorationlib. It’s a module we will be using, and was written to support this book. Let’s Install it.

!pip install --upgrade git+https://github.com/coaxlab/explorationlib

!pip install --upgrade git+https://github.com/MattChanTK/gym-maze.git

!pip install celluloid # for the gifs

Import some modules#

Let us begin by importing some modules from the standard library. These are just some general purpose tools we will use.

import shutil # For working with files

import glob # To help search for pathnames

import os # For working with the local operating system

import copy # We will be using deep copy a lot

import sys # For flexibility working with different OSs

Next we can import modules that are common to scientific programming in python.

import numpy as np # General purpose utility for math operations

import pandas as pd # For working with data frames

import seaborn as sns # A great plotting utility

import matplotlib.pyplot as plt # Another good plotting utility

Now let’s grab a bunch of functions from explorationlib to play with. If our install using pip above worked out, this next cell should run without error.

# All the explorers we will play with are called

# "agents"; a bit of computer science jargon

from explorationlib import agent

# The environments we will simulate live in a "gym"

from explorationlib import local_gym as gym

# Computational experiments are run with 'experiment'

from explorationlib.run import experiment

# Here are some tools to select, save, and load

# data from computational experiments

from explorationlib.util import select_exp

from explorationlib.util import load

from explorationlib.util import save

# A bunch of tools for plotting and for

# movie making

from explorationlib.plot import plot_position2d

from explorationlib.plot import plot_length_hist

from explorationlib.plot import plot_length

from explorationlib.plot import plot_angle_hist

from explorationlib.plot import plot_targets2d

#from explorationlib.plot import render_2d

from explorationlib.plot import show_gif

# A couple metrics for scoring how well, or poorly,

# an exploration experiment went.

from explorationlib.score import search_efficiency

from explorationlib.score import total_reward

#from explorationlib.score import average_reward

Before we go too far, let’s do some work to make the plots look nicer. We don’t have to do this, but having clear data visualization helps us better understand our results.

%matplotlib inline

%config InlineBackend.figure_format='retina'

plt.rcParams["axes.facecolor"] = "white"

plt.rcParams["figure.facecolor"] = "white"

plt.rcParams["font.size"] = "16"

Finally, let’s set up some better autocomplete and development tools in our notebooks. Again, optional. If this errors out, you might consider skipping it.

# config IPCompleter.greedy=True

# load_ext autoreload

# autoreload 2

Make a space for the data#

Make a folder called “data” to keep experimental data in. We will use it for all our examples.

WARNING When running in a colab, anything you save to “data/” will be lost as soon as you shutdown the colab. This is ok for now. We will cover ways to save your data permanently later on.

if not os.path.exists("data"):

os.makedirs("data")

Section 1 - Welcome to our virtual world#

We are now ready to make a little virtual world and little creatures in it that we can use as our test subjects.

For today we will work just with random search strategies. In particular, we want to show how random movements in a simple foraging context is an effective, if not ideal, strategy for simple organisms.

Environment#

The context that our agent will explore in is a 2-dimensional flat world environment. The environment is enclosed by walls on all sides, preventing our agent from escaping.

Throughout the space there are food sources sprinkled evenly and randomly throughout. Specifically we will distribute the food according to a uniform distribution, which means that every point in space within our environment has an equal likelihood of having a food pellet present. Every time the agent hits a food target they get 1 point.

The goal of the agent is to find at least one food source within the time alloted. More is obviously better, but all our agent needs is one little bite to survive.

Agents#

For most of this class we will be working with artifical agents that I call valentinos. Each valentino is a simulated agent that forages the little 2-dimensional virtual world. We have complete control over the valentino’s behavior and will expand their capabilities as the class progresses.

We will start simple today with an agent that uses Brownian motion to explore and find food. It is the simplest valentino possible. It doesn’t use sensory signals or other environmental information to move about. It just randomly diffuses throughout the environment. We’ll name this valentino Rando. Rando makes two decisions at every moment (i.e., time point). First, there is the decision of how far to move. We call this the step size and will use the parameter \(l_i\) to refer to the length of the step taken at the \(i^{th}\) moment. This will be sampled according to an gamma distribution with a scale parameter \(\theta\) (with \(\theta=2\) for now) and shape parameter \(k\) (\(k=2\) for now).

This gives us a probability distribution described like this:

Here \(\Gamma\) is what is known as the gamma function. But we can skip the details for now. Just don’t mix it up with the gamma distribution itself (which is described by the above equation that includes a gamma function).

So we say that each step \(l_i\) is sampled as:

Let’s see what this looks like.

# Set the scale parameter

scale_param = 0.5

shape_param = 4

# Generate gamma distribution data

data = np.random.gamma(scale=scale_param, shape=shape_param, size=1000)

# Create the plot

plt.figure(figsize=(4, 3))

plt.hist(data, bins=30, density=True, alpha=0.6, color='b', edgecolor='black')

# Add titles and labels

plt.title(f'Gamma Distribution (scale={scale_param}, shape={shape_param})')

plt.xlabel('Step size')

plt.ylabel('Frequency')

plt.legend()

plt.grid(True)

# Show the plot

plt.show()

Along with step length, the agent also must decide the direction of movement. We will use the parameter \(\theta\) to represent the angle of movement in polar coordinates. At each moment \(i\) the movement direction \(\theta_i\) will be sampled according to a uniform distribution ranging from 0 to \(2\pi\).

This means that at any given moment, Rando can turn in any direction in a \(360^{\circ}\) circle. We can visualie this distribution of angles pretty easily.

n_points=1000

n_bins=30

# Generate uniform distribution of angles from 0 to 2*pi

angles = np.random.uniform(0, 2*np.pi, n_points)

# Create the polar histogram

plt.figure(figsize=(4, 4))

ax = plt.subplot(111, projection='polar')

# Plot the histogram

ax.hist(angles, bins=n_bins, density=True, color='b', alpha=0.75, edgecolor='black')

# Add titles and labels

plt.title('Histogram of angles (0 to 2*pi)', va='bottom')

plt.grid(True)

# Show the plot

plt.show()

Task#

We want to run a simple experiment using explorationlib. We will simulate Rando’s behavior as they wander the environment seeking “food” resources that are detectable by scents (though our toy animal has no sensory abilities yet).

Instantiate a single Rando agent, in our 2-d foraging environment.

Run an experiment, for 500 steps.

Plot the environment and the agent’s path in it.

Score the agent, and reward (targets), and its efficiency.

Instantiate#

We can get things started by specifying all of the relevant parameters and objects.

# Parameters

exp_name = "data/explorationlib_rando.pkl" # where we can store the data

num_experiments = 1 # we only want to run one experiment

num_steps = 1000 # how many exploration steps in space?

scale = 0.5 # Diffusion constant

shape = 4 # Length of the skew (lower = more skew)

boundary = (10, 10) # a 2d world, 10 by 10

mode = "stopping" # stop when we hit a wall

num_targets = 100 # how many targets in the arena?

# Setup targets. Targets are an abstraction for the

# thing we are exploring to find. For now, all targets

# are just the number 1 placed randomly about.

targets = gym.uniform_targets(num_targets, boundary)

values = gym.constant_values(targets, 1)

# Setup agent and env

env = gym.Bounded(boundary=boundary, mode=mode)

env.add_targets(targets, values)

rando = agent.Diffusion2d(scale=scale, shape=shape)

Run#

We use the experiment function to execute the simulations.

(If you’ve been playing with this notebook for a while, then clear the old output first.)

# Cleanup old versions

for path in glob.glob(f"{exp_name}"):

os.remove(path)

# Run!

exp_data = experiment(

f"Rando",

rando,

env,

num_steps=num_steps,

num_experiments=num_experiments,

seed=5858,

dump=False,

)

Plot#

explorationlib has a set of built in visualization functions that we loaded at the beginning. We’ll use a few here to see how our agents are doing.

First let’s now see our environment. The plot below will show the foraging space for Rando and each dot indicates the location of a food source. Notice the uniform scattering of food across the environment.

# Plot the 2d env, and the targets (black dots)

plot_targets2d(env, boundary=boundary, title="Foraging Env.", figsize=(3,3))

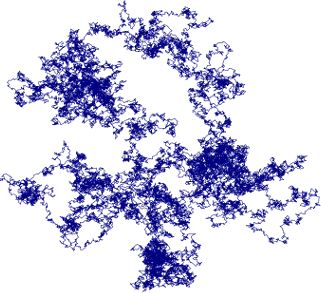

Now let’s take a look at Rando’s behavior. We can do this by plotting the positions that the agent (red line) took during this one example run.

# View size

plot_boundary = (20, 20)

# Agent

ax = None

ax = plot_position2d(

select_exp(exp_data,0),

boundary=plot_boundary,

label=f"Rando",

title=f"Brownian Exp.",

color="red",

alpha=0.6,

ax=None,

)

ax = plot_targets2d(

env,

boundary=boundary,

color="black",

alpha=1,

label="Targets",

ax=ax,

)

Notice that there are quite a few parameters you can play with to change the size of the plot, colors, and so on. See explorationlib.plot for all the options

Finally, let’s see how our distribution of step lengths looks. Here we will just plot the step at each jump (or turn) and the histogram of step lengths.

# View size

plot_boundary = (20, 20)

# Agent

ax=None

ax=plot_length(select_exp(exp_data,0),color='blue',figsize=(4,3))

ax=plot_length_hist(select_exp(exp_data,0),color='blue', loglog=False, figsize=(3.5,3))

This distribution should look very similar to the sampling distribution we used to make it (i.e., the gamma distribution above)

Score#

Now we can quantify Rando’s behavior in this simple experiment.

First we will look at total_reward, which counts how many times Rando ran into a food target. Recall that every time the agent hits a food source, they get 1 point.

# Total rewards (targets) collected

total_reward(exp_data)

So our agent hit their food this many times.

Next we will look at the efficiency of the search, which is just the number of movements taken by the agent over the number of food targets collected.

# How efficient (movements/target) was the search

search_efficiency(exp_data)

This means that our Rando agent took around 5 steps to hit a new food source.

We want this score to be as low as possible. Right now this number doesn’t mean much because we have nothing to compare Rando’s behavior to. We will come back to it in later labs.

Play#

Go back to the top of this section, change the random seed number in the cell where we ran the experiment and run it again. Each time you do you should generate a new exploration pattern. You’ll get different rewards and efficiency each time as well.

Question 1.1#

Rerun the simulation above but now have the agent run for 10,000 steps. What happens to the histogram (or distribution) of step lengths?

Answer:

Here is my very smart answer.

Question 1.2#

Repeat the experiment 10 times with different random seeds. Keep track of the two outcome measures, total reward and search efficiency. How much variation do you see from run to run?

Answer:

(insert response here)

Question 1.3#

Now set the random seed number back to 5858 and rerun the original simulation. Then change the number of steps in each simulation (the num_steps parameter) to 1000. Change the scale parameter to 2 and keep the shape parameter at 4. How do the two outcome measures (total reward and search efficiency) change? How does the distribution of step lengths change.

Answer:

(insert response here)

IMPORTANT Did you collaborate with anyone on this assignment, or use LLMs like ChatGPT? If so, list their names here.

Write Name(s) here